Unlock the Full Potential of AI with Contextual Machine Learning (CML)

The Context Window Breakthrough

Most AI models today, such as the Transformers underpinning GPT, struggle to retain information from far back in a sequence or to process long histories. Their “context windows” are limited. Because the cost of standard self-attention grows quadratically with context length, scaling those windows up requires huge compute resources.
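As a rough illustration of the compute problem, the sketch below estimates how much memory a standard Transformer layer needs just to hold its attention scores. For a context of L tokens, those scores form an L × L matrix per head. The head count (16) and the 2-byte (fp16) values are illustrative assumptions. Memory-efficient kernels such as FlashAttention avoid materializing this matrix, so treat the numbers as an upper-bound sketch rather than a measurement of any particular model.

```python
# Rough illustration of why naive self-attention gets expensive as the
# context window grows. Assumes a standard Transformer layer that
# materializes a full L x L attention-score matrix per head (no
# memory-efficient kernels). Head count and fp16 storage are assumptions.

def attention_scores_bytes(context_len: int, num_heads: int = 16,
                           bytes_per_value: int = 2) -> int:
    """Memory for one layer's attention-score matrices, in bytes."""
    return context_len * context_len * num_heads * bytes_per_value


for L in (1_024, 8_192, 65_536):
    gib = attention_scores_bytes(L) / 2**30
    print(f"context {L:>6}: {gib:8.2f} GiB per layer")
```

This term grows with L². An 8× longer context therefore needs 64× more memory for these scores.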

Contextual Machine Learning (CML) changes the game by enabling vastly larger context windows, so models can process long sequential datasets without sacrificing speed or efficiency.

Quantum-Inspired Efficiency: On GPUs Today

Originally designed for quantum processors (QPUs), CML’s algorithm leverages quantum contextuality to store and recall more information with fewer parameters. We’ve adapted this approach to run on commercial GPUs, delivering quantum-inspired advantages today while remaining ready for native QPU acceleration in the future.

The result is faster runtime, smaller models, and better memory scaling, with applications ranging from genomic analysis to real-time signal processing.

| Feature | Benefit |
|---|---|
| Expanded context windows | More accurate predictions on long sequences |
| Smaller models | Deploy on edge GPUs such as NVIDIA Jetson |
| Faster runtime scaling | N³ complexity vs. N⁴ in comparable models |
| Quantum-ready | Even greater speedups on future QPUs |
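A little arithmetic makes the table's scaling comparison concrete. The source does not define N, so this sketch assumes N is the same size parameter in both cost models. It also ignores constant factors, which can dominate at small N.

```python
# Compare the two scaling laws quoted in the table: N^3 for CML versus
# N^4 for "comparable models". Assumes N means the same thing in both
# and ignores constant factors (an illustrative assumption).

def relative_cost(n: int) -> float:
    """Ratio of N^4 work to N^3 work at size n (constant factors ignored)."""
    return n**4 / n**3


def growth_when_doubled(exponent: int) -> int:
    """Factor by which O(N^exponent) work grows when N doubles."""
    return 2**exponent


print(f"Doubling N: N^3 work grows {growth_when_doubled(3)}x, "
      f"N^4 work grows {growth_when_doubled(4)}x")
for n in (10, 100, 1_000):
    print(f"N = {n:>5}: N^4 work is {relative_cost(n):,.0f}x N^3 work")
```

Under these assumptions, the advantage grows linearly with N. Doubling N multiplies N³ work by 8 but N⁴ work by 16.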

Setting New Records in Real-World Tasks

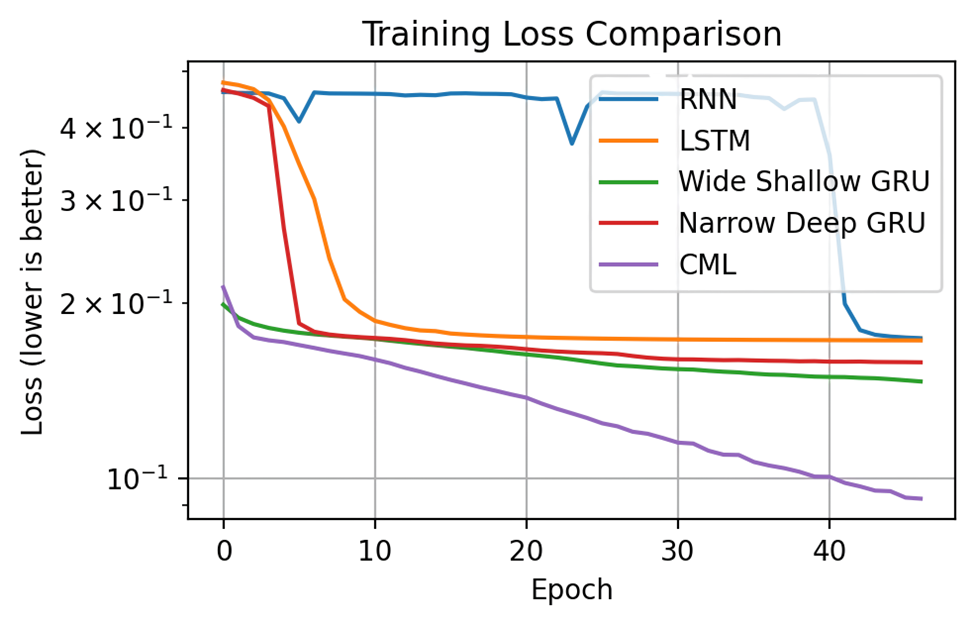

CML consistently outperforms state-of-the-art models in tasks that demand long-range memory.

- Sequence Translation: Matched Transformer performance on Spanish-to-English translation using 10× less memory.

- Genomics: Achieved 86.7% accuracy on mouse enhancer classification (internal data), beating Transformer, HyenaDNA, and DNABERT models while using fewer than half the parameters of the Transformer baseline (240k vs. 530k).

- RF Data Processing: Delivered 32× compression with ~94% fidelity for demodulation and 4× compression with ~94% accuracy for classification (internal data).

Mouse enhancer classification results:

| Model | Accuracy | Pre-training | Size |
|---|---|---|---|
| CNN | 69.0% | N | 3 layers with 16, 8, 4 filters |
| DNABERT | 66.9% | Y | 110M params |
| Transformer | 80.1% / 80.1% | Y / N (same accuracy) | 530k params |
| HyenaDNA | 85.1% / 84.7% | Y / N | 440k params |
| CML | 86.7% | N | 240k params |
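The genomics comparison can be checked directly against the table. This sketch copies the reported accuracies and parameter counts into a dictionary. Where a model was reported both with and without pre-training, it uses the higher accuracy. The CNN is left out because its size is given in filters rather than parameters.

```python
# Mouse enhancer classification results copied from the table:
# model -> (accuracy in %, parameter count). For models reported with and
# without pre-training, the higher accuracy is used. CNN omitted because
# its size is reported in filters, not parameters.
results = {
    "DNABERT": (66.9, 110_000_000),
    "Transformer": (80.1, 530_000),
    "HyenaDNA": (85.1, 440_000),
    "CML": (86.7, 240_000),
}

cml_acc, cml_params = results["CML"]
for name, (acc, params) in results.items():
    if name == "CML":
        continue
    # Share of the baseline's parameter count that CML uses,
    # and CML's accuracy gain over that baseline in percentage points.
    print(f"{name:>11}: CML uses {cml_params / params:6.1%} of its parameters, "
          f"accuracy delta {cml_acc - acc:+.1f} pts")
```

Run as-is, this shows CML using about 45% of the Transformer's parameters and about 0.2% of DNABERT's, but roughly 55% of HyenaDNA's. The "fewer than half the parameters" comparison therefore holds against the Transformer and DNABERT baselines, not against HyenaDNA.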

Where CML Delivers the Biggest Impact

CML’s extended context capabilities open new possibilities across industries:

Autonomous Driving

Detect anomalies and forecast events from long sequences of driving data.

National Security & Resilience

Process long-range sensor data streams for Intelligence, Surveillance, and Reconnaissance (ISR).

Energy & Resource Exploration

Perform long-range demand forecasting for power grids.

Life Sciences & Drug Discovery

Analyze genomic datasets with billions of base pairs.

Proven in Mission-Critical Environments

We’ve already put CML to work in high-stakes applications:

- NVIDIA Collaboration: CML debuted at NVIDIA GTC and runs on NVIDIA Jetson edge platforms.

- Quantum-Inspired Rapid Context (QuIRC) Project: In collaboration with the U.S. Navy, enhancing real-time RF signal processing for greater situational awareness and operational efficiency.

- Secure AI for PNT (SAPIENT) Platform: A multi-sensor fusion system for navigation and intelligence that leverages CML to process large sensor data streams on small-form-factor GPUs. Infleqtion won first place out of 133 companies in the U.S. Army’s xTechScalable AI competition for SAPIENT.

Dive Deeper into CML Technology

Explore the science that inspired CML and see how quantum-inspired AI is redefining the limits of machine learning.

Get In Touch

Your path to quantum advantage starts here.